dirty_cat.SimilarityEncoder

Usage examples at the bottom of this page.

- class dirty_cat.SimilarityEncoder(similarity=None, ngram_range=(2, 4), categories='auto', dtype=<class 'numpy.float64'>, handle_unknown='ignore', handle_missing='', hashing_dim=None, n_prototypes=None, random_state=None, n_jobs=None)

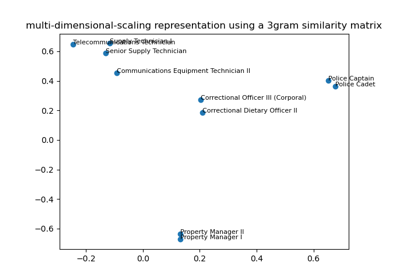

Encode string categorical features to a similarity matrix.

The input to this transformer should be an array-like of strings. The method is based on calculating the morphological similarities between the categories. This encoding is an alternative to OneHotEncoder for dirty categorical variables.

The principle of this encoder is as follows:

Given an input string array X = [x1, ..., xn] with k unique categories [c1, ..., ck] and a similarity measure sim(s1, s2) between strings, we define the encoded vector of xi as [sim(xi, c1), ..., sim(xi, ck)]. Similarity encoding of X results in a matrix with shape (n, k) that captures morphological similarities between string entries.

To avoid dealing with high-dimensional encodings when k is high, we can use d << k prototypes [p1, ..., pd] with which similarities will be computed: xi -> [sim(xi, p1), ..., sim(xi, pd)]. These prototypes can be automatically sampled from the input data (most frequent categories, KMeans) or provided by the user.
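The encoding rule above can be sketched in plain Python. This is a minimal sketch, not the library's implementation: it assumes that sim(s1, s2) is the Jaccard index of the sets of character n-grams of the two strings, after lowercasing and padding each string with one space on each side. Under that assumption it reproduces the 0.42857143 similarity between 'Female' and 'Male' shown in the Examples section. The function names `char_ngrams`, `ngram_similarity` and `similarity_encode` are hypothetical.

```python
from typing import List, Sequence, Set, Tuple


def char_ngrams(s: str, ngram_range: Tuple[int, int] = (2, 4)) -> Set[str]:
    """Set of character n-grams of s for all n with min_n <= n <= max_n.

    Assumption: s is lowercased and padded with one space on each side first.
    """
    min_n, max_n = ngram_range
    padded = f" {s.lower()} "
    return {
        padded[i:i + n]
        for n in range(min_n, max_n + 1)
        for i in range(len(padded) - n + 1)
    }


def ngram_similarity(s1: str, s2: str, ngram_range: Tuple[int, int] = (2, 4)) -> float:
    """Assumed sim(s1, s2): Jaccard index of the two n-gram sets."""
    a, b = char_ngrams(s1, ngram_range), char_ngrams(s2, ngram_range)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def similarity_encode(
    X: Sequence[str],
    prototypes: Sequence[str],
    ngram_range: Tuple[int, int] = (2, 4),
) -> List[List[float]]:
    """Map each x_i to [sim(x_i, p_1), ..., sim(x_i, p_d)].

    With all k unique categories as prototypes this is the (n, k) encoding;
    with d << k prototypes it is the smaller (n, d) encoding.
    """
    return [[ngram_similarity(x, p, ngram_range) for p in prototypes] for x in X]


# 'Female' and 'Male' share 9 of 21 distinct n-grams: 9 / 21 = 3 / 7 ≈ 0.4286.
encoded = similarity_encode(["Female", "Male"], ["Female", "Male"])

# A narrower ngram_range changes the score: with bigrams only, 4 of 8 are shared.
bigram_score = ngram_similarity("Female", "Male", ngram_range=(2, 2))
```

Passing a short list of prototypes instead of every unique category is all that changes between the (n, k) and (n, d) variants in this sketch.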

- Parameters:

- similarity : None

Deprecated in dirty_cat 0.3, will be removed in 0.5. Was used to specify the type of pairwise string similarity to use. Since 0.3, only the ngram similarity is supported.

- ngram_range : int 2-tuple (min_n, max_n), default=(2, 4)

The lower and upper boundaries of the range of n-values for different n-grams used in the string similarity. All values of n such that min_n <= n <= max_n will be used.

- categories : {‘auto’, ‘k-means’, ‘most_frequent’} or list of list of str

Categories (unique values) per feature:

‘auto’ : Determine categories automatically from the training data.

list : categories[i] holds the categories expected in the i-th column. The passed categories must be sorted and should not mix strings and numeric values.

‘most_frequent’ : Computes the most frequent values for every categorical variable and uses them as the prototype categories.

‘k-means’ : Clusters the values with k-means and picks the category nearest to each centroid in order to choose the prototype categories.

The categories used can be found in the categories_ attribute.

- dtype : number type, default=float64

Desired dtype of output.

- handle_unknown : ‘error’ or ‘ignore’, default=’ignore’

Whether to raise an error or ignore it if an unknown category is present during transform (default is to ignore). When this parameter is set to ‘ignore’ and an unknown category is encountered during transform, the resulting encoded columns for this feature will be all zeros. In the inverse transform, an unknown category will be denoted as None.

- handle_missing : ‘error’ or ‘’, default=’’

Whether to raise an error or impute with the blank string ‘’ if missing values (NaN) are present during fit (default is to impute). When this parameter is set to ‘’ and a missing value is encountered during fit_transform, the resulting encoded columns for this feature will be all zeros. In the inverse transform, the missing category will be denoted as None.

- hashing_dim : int, optional

If None, the base vectorizer is a CountVectorizer; otherwise it is a HashingVectorizer with a number of features equal to hashing_dim.

- n_prototypes : int, optional

Number of prototypes to select. Used only when categories is ‘most_frequent’ or ‘k-means’. Must be a positive integer.

- random_state : int or RandomState, optional

Used only when categories is ‘k-means’.

- n_jobs : int, optional

Maximum number of processes used to compute similarity matrices. Used only if fast=True in transform().

See also

dirty_cat.MinHashEncoder : Encode string columns as a numeric array with the minhash method.

dirty_cat.GapEncoder : Encode dirty categories (strings) by constructing latent topics with continuous encoding.

dirty_cat.deduplicate : Deduplicate data by hierarchically clustering similar strings.

Notes

The functionality of

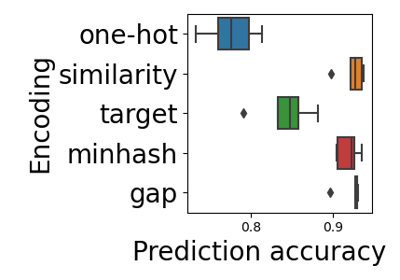

SimilarityEncoderis easy to explain and understand, but it is not scalable. Instead, theGapEncoderis usually recommended.References

For a detailed description of the method, see “Similarity encoding for learning with dirty categorical variables” by Cerda, Varoquaux, and Kégl, 2018 (Machine Learning journal, Springer).
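A rough, illustrative calculation behind the scalability note in the Notes section: the transform output is a dense float64 array (as in the Examples section), so its memory grows with n_samples × n_columns. The sizes n, k and d below are hypothetical, and memory is only one part of the cost (the similarity computations grow as well).

```python
def dense_output_bytes(n_samples: int, n_columns: int, itemsize: int = 8) -> int:
    """Memory of a dense (n_samples, n_columns) array; itemsize=8 for float64."""
    return n_samples * n_columns * itemsize


n = 1_000_000  # hypothetical number of rows
k = 10_000     # hypothetical number of unique categories
d = 100        # hypothetical number of prototypes, d << k

full_gb = dense_output_bytes(n, k) / 1e9       # (n, k) encoding: 80.0 GB
prototype_gb = dense_output_bytes(n, d) / 1e9  # (n, d) encoding: 0.8 GB
```

Reducing k unique categories to d prototypes divides the output size by k / d, here a factor of 100.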

Examples

>>> from dirty_cat import SimilarityEncoder
>>> enc = SimilarityEncoder()
>>> X = [['Male', 1], ['Female', 3], ['Female', 2]]
>>> enc.fit(X)
SimilarityEncoder()

It inherits the same methods as the OneHotEncoder:

>>> enc.categories_
[array(['Female', 'Male'], dtype=object), array([1, 2, 3], dtype=object)]

But it provides a continuous encoding based on similarity instead of a discrete one based on exact matches:

>>> enc.transform([['Female', 1], ['Male', 4]])
array([[1.        , 0.42857143, 1.        , 0.        , 0.        ],
       [0.42857143, 1.        , 0.        , 0.        , 0.        ]])

>>> enc.inverse_transform(
...     [[1., 0.42857143, 1., 0., 0.], [0.42857143, 1., 0., 0., 0.]]
... )
array([['Female', 1], ['Male', None]], dtype=object)

>>> enc.get_feature_names_out(['gender', 'group'])
array(['gender_Female', 'gender_Male', 'group_1', 'group_2', 'group_3'], ...)

- Attributes:

- categories_ : list of ndarray

The categories of each feature determined during fitting (in the same order as the output of transform()).


Methods

| Method | Description |
| --- | --- |
| fit(X[, y]) | Fit the instance to X. |
| fit_transform(X[, y]) | Fit to data, then transform it. |
| get_feature_names_out([input_features]) | Get output feature names for transformation. |
| get_most_frequent(prototypes) | Get the most frequent category prototypes. |
| get_params([deep]) | Get parameters for this estimator. |
| inverse_transform(X) | Convert the data back to the original representation. |
| set_output(*[, transform]) | Set output container. |
| set_params(**params) | Set the parameters of this estimator. |
| transform(X[, fast]) | Transform X using the specified encoding scheme. |

- fit(X, y=None)

Fit the instance to X.

- Parameters:

- X : array-like, shape [n_samples, n_features]

The data to determine the categories of each feature.

- y : None

Unused, only here for compatibility.

- Returns:

SimilarityEncoder

The fitted SimilarityEncoder instance (self).

- fit_transform(X, y=None, **fit_params)

Fit to data, then transform it.

Fits transformer to X and y with optional parameters fit_params and returns a transformed version of X.

- Parameters:

- X : array-like of shape (n_samples, n_features)

Input samples.

- y : array-like of shape (n_samples,) or (n_samples, n_outputs), default=None

Target values (None for unsupervised transformations).

- **fit_params : dict

Additional fit parameters.

- Returns:

- X_new : ndarray of shape (n_samples, n_features_new)

Transformed array.

- get_feature_names_out(input_features=None)

Get output feature names for transformation.

- Parameters:

- input_features : array-like of str or None, default=None

Input features.

If input_features is None, then feature_names_in_ is used as the input feature names. If feature_names_in_ is not defined, then the following input feature names are generated: [“x0”, “x1”, …, “x(n_features_in_ - 1)”].

If input_features is an array-like, then input_features must match feature_names_in_ if feature_names_in_ is defined.

- Returns:

- feature_names_out : ndarray of str objects

Transformed feature names.
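The naming pattern visible in the Examples section (‘gender_Female’, ‘group_1’, …) can be mimicked with a small helper. This is a hypothetical sketch, not the library's method: `feature_names_out` is an illustrative name, it takes the fitted categories directly, and it does not model feature_names_in_; when no names are given it falls back to x0, x1, … as described above.

```python
from typing import List, Optional, Sequence


def feature_names_out(
    categories: Sequence[Sequence[object]],
    input_features: Optional[Sequence[str]] = None,
) -> List[str]:
    """Hypothetical helper: one '<input feature>_<category>' name per column."""
    if input_features is None:
        # Default input names x0, x1, ..., x(n_features - 1).
        input_features = [f"x{i}" for i in range(len(categories))]
    if len(input_features) != len(categories):
        raise ValueError("input_features must have one name per input column")
    return [
        f"{name}_{cat}"
        for name, cats in zip(input_features, categories)
        for cat in cats
    ]


names = feature_names_out([["Female", "Male"], [1, 2, 3]], ["gender", "group"])
default_names = feature_names_out([["Female", "Male"]])
```

Each input column contributes one output name per category, so the output width equals the total number of categories across features.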

- get_most_frequent(prototypes)

Get the most frequent category prototypes.

- Parameters:

- prototypes : list of str

The list of values for a categorical variable.

- Returns:

ndarray

The n_prototypes most frequent values for a categorical variable.
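Selecting the n_prototypes most frequent values can be sketched with collections.Counter. This is a minimal sketch under stated assumptions: `most_frequent_prototypes` is a hypothetical name, it returns a list rather than an ndarray, and ties are broken by first occurrence (the documented behaviour of Counter.most_common), which may differ from the library's tie-breaking.

```python
from collections import Counter
from typing import List, Sequence


def most_frequent_prototypes(values: Sequence[str], n_prototypes: int) -> List[str]:
    """Return the n_prototypes most frequent values, most frequent first."""
    if n_prototypes <= 0:
        raise ValueError("n_prototypes must be a positive integer")
    # most_common orders by count, then by first occurrence for ties.
    return [value for value, _ in Counter(values).most_common(n_prototypes)]


cities = ["Paris", "London", "Paris", "Berlin", "Paris", "London"]
protos = most_frequent_prototypes(cities, 2)
```

The encoding then only needs similarities to these prototypes, giving d = n_prototypes output columns for the feature.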

- get_params(deep=True)

Get parameters for this estimator.

- Parameters:

- deep : bool, default=True

If True, will return the parameters for this estimator and contained subobjects that are estimators.

- Returns:

- params : dict

Parameter names mapped to their values.

- property infrequent_categories_

Infrequent categories for each feature.

- inverse_transform(X)

Convert the data back to the original representation.

When unknown categories are encountered (all zeros in the encoded columns of a feature), None is used to represent this category. If the feature with the unknown category has a dropped category, the dropped category will be its inverse.

For a given input feature, if there is an infrequent category, ‘infrequent_sklearn’ will be used to represent the infrequent category.

- Parameters:

- X : {array-like, sparse matrix} of shape (n_samples, n_encoded_features)

The transformed data.

- Returns:

- X_tr : ndarray of shape (n_samples, n_features)

Inverse transformed array.
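One way to read the inverse transform is as a per-feature argmax over the encoded columns, with an all-zero block mapped to None. The sketch below assumes exactly that rule (it is not taken from the library's code, and `inverse_similarity_encoding` is a hypothetical name); with the values from the Examples section it recovers [['Female', 1], ['Male', None]].

```python
from typing import List, Optional, Sequence


def inverse_similarity_encoding(
    rows: Sequence[Sequence[float]],
    categories: Sequence[Sequence[object]],
) -> List[List[Optional[object]]]:
    """Decode each feature block by its argmax; an all-zero block gives None."""
    decoded = []
    for row in rows:
        out, start = [], 0
        for cats in categories:
            # Columns belonging to the current input feature.
            block = row[start:start + len(cats)]
            start += len(cats)
            if all(v == 0 for v in block):
                out.append(None)  # unknown category
            else:
                best = max(range(len(block)), key=block.__getitem__)
                out.append(cats[best])
        decoded.append(out)
    return decoded


decoded = inverse_similarity_encoding(
    [[1.0, 0.42857143, 1.0, 0.0, 0.0], [0.42857143, 1.0, 0.0, 0.0, 0.0]],
    [["Female", "Male"], [1, 2, 3]],
)
```

Because similarity rows are not one-hot, the largest similarity is used rather than the position of a single 1.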

- set_output(*, transform=None)

Set output container.

See Introducing the set_output API for an example of how to use the API.

- Parameters:

- transform : {“default”, “pandas”}, default=None

Configure output of transform and fit_transform.

“default”: Default output format of a transformer

“pandas”: DataFrame output

None: Transform configuration is unchanged

- Returns:

- self : estimator instance

Estimator instance.

- set_params(**params)

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as Pipeline). The latter have parameters of the form <component>__<parameter> so that it’s possible to update each component of a nested object.

- Parameters:

- **params : dict

Estimator parameters.

- Returns:

- self : estimator instance

Estimator instance.
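The <component>__<parameter> convention can be illustrated with a toy nested object. This is a hypothetical sketch (`ToyStep` and `ToyPipeline` are not scikit-learn classes): it splits each key on the first double underscore and routes the value to the named component, and it only supports nested keys.

```python
from typing import Any, Dict


class ToyStep:
    """Hypothetical stand-in for an estimator with flat parameters."""

    def __init__(self, **params: Any) -> None:
        self.params: Dict[str, Any] = dict(params)


class ToyPipeline:
    """Hypothetical nested object holding named steps."""

    def __init__(self, **steps: ToyStep) -> None:
        self.steps: Dict[str, ToyStep] = dict(steps)

    def set_params(self, **params: Any) -> "ToyPipeline":
        for key, value in params.items():
            # Split on the first '__': '<component>__<parameter>'.
            component, sep, name = key.partition("__")
            if not sep or component not in self.steps:
                raise ValueError(f"Invalid parameter {key!r}")
            self.steps[component].params[name] = value
        return self  # return self, like set_params above


pipe = ToyPipeline(encoder=ToyStep(ngram_range=(2, 4)), model=ToyStep(alpha=1.0))
pipe.set_params(encoder__ngram_range=(2, 3))
```

With a scikit-learn Pipeline whose encoding step is named, say, 'enc', the analogous call would be pipe.set_params(enc__ngram_range=(2, 3)).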